I wrote my Advent of Code in D. The programming language. It was the first time I used D in earnest every day for something substantial. It was fun and I learned things along the way, such as easy metaprogramming, concurrency I could write correctly, and functional programming that doesn’t feel like I have one arm tied behind my back. I would do it all over again.

My main programming languages are C++ and Python. For me, D is the combination of the best of these two: the power of C++ with the ease of use of Python. Or to put it another way, D is the C++ I always wanted. This used to be D’s sales pitch, down to its name. There’s lots of evident C++ heritage in D. It is a C++ successor worthy of consideration.

Why D?

This is the question people always ask me. Whenever I bring up D, I am faced with the following set of standard rebuttals:

- Why not Rust?

- D? That’s still around?

- D doesn’t bring anything new or interesting

- But the GC…

I’ll answer these briefly: D was easier for me to learn than Rust, yes, it’s still around and very lively, it has lots of interesting ideas, and what garbage collector? I guess there’s a GC, but I’ve never noticed and it’s never gotten in my way.

I will let D speak for itself further below. For now, I would like to address the “why D?” rebuttals in a different way. It seems to me that people would rather not have to learn another new thing. Right now, Rust has a lot of attention and some of the code, and right now it seems like Rust may be the solution we always wanted for safe, systems-level coding. It takes effort to work on a new programming language. So, I think the “why D?” people are mostly saying, “why should I have to care about a different programming language, can’t I just immediately dismiss D and spend time learning Rust instead?”

I posit that no, you shouldn’t immediately dismiss D. If nothing else, try to listen to its ideas, many of which are distilled into Alexandrescu’s The D Programming Language. I recommend this book as good reading material for computer science, even if you never plan to write any D (as a language reference itself, it’s already dated in a number of ways, but I still recommend it for the ideas it discusses). Also browse the D Gems section in the D tour. In the meantime, let me show you what I learned about D while using it.

Writing D every day for over 25 days

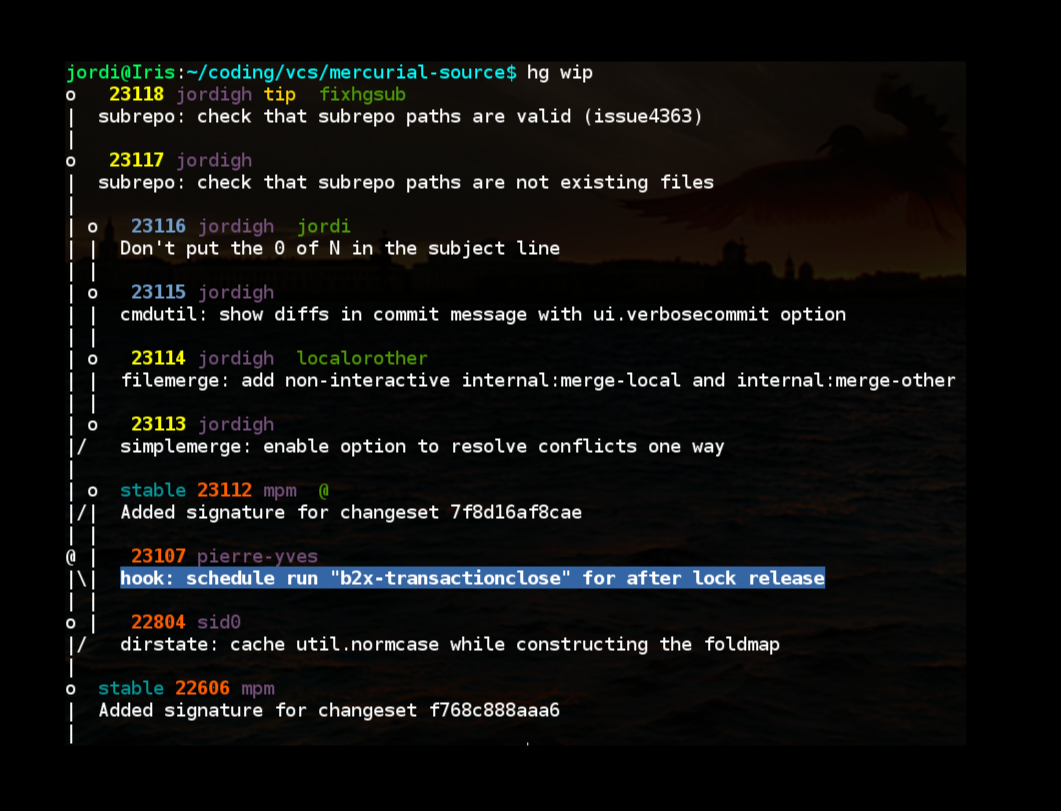

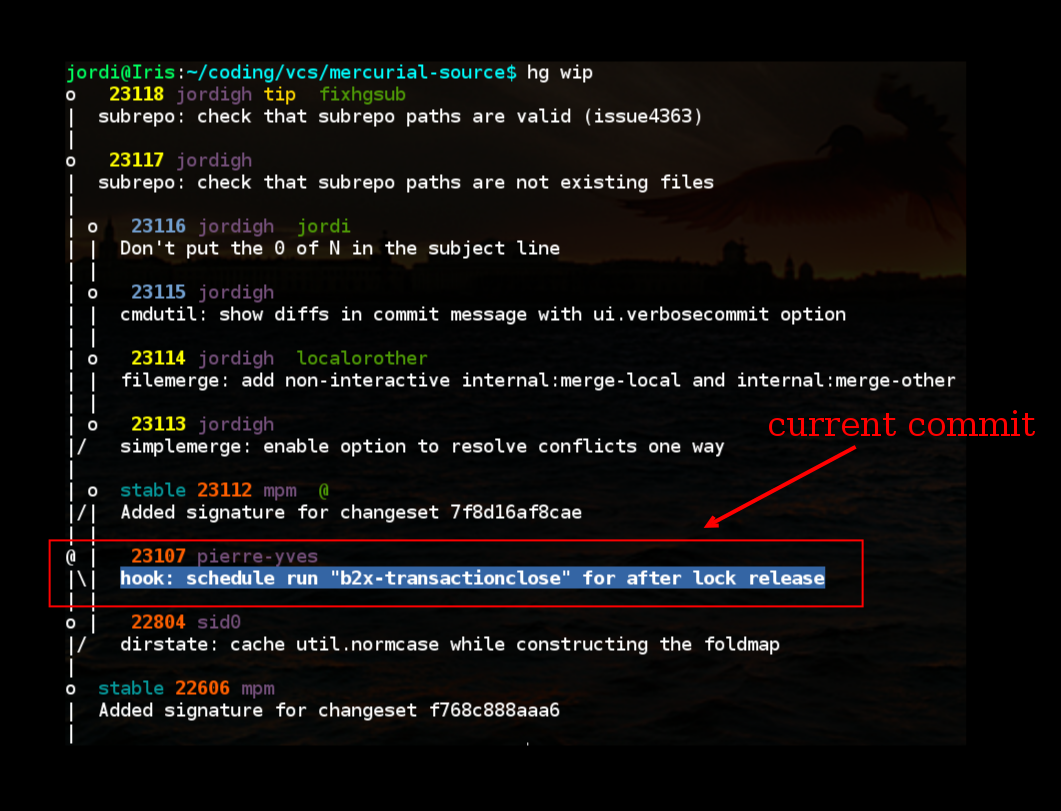

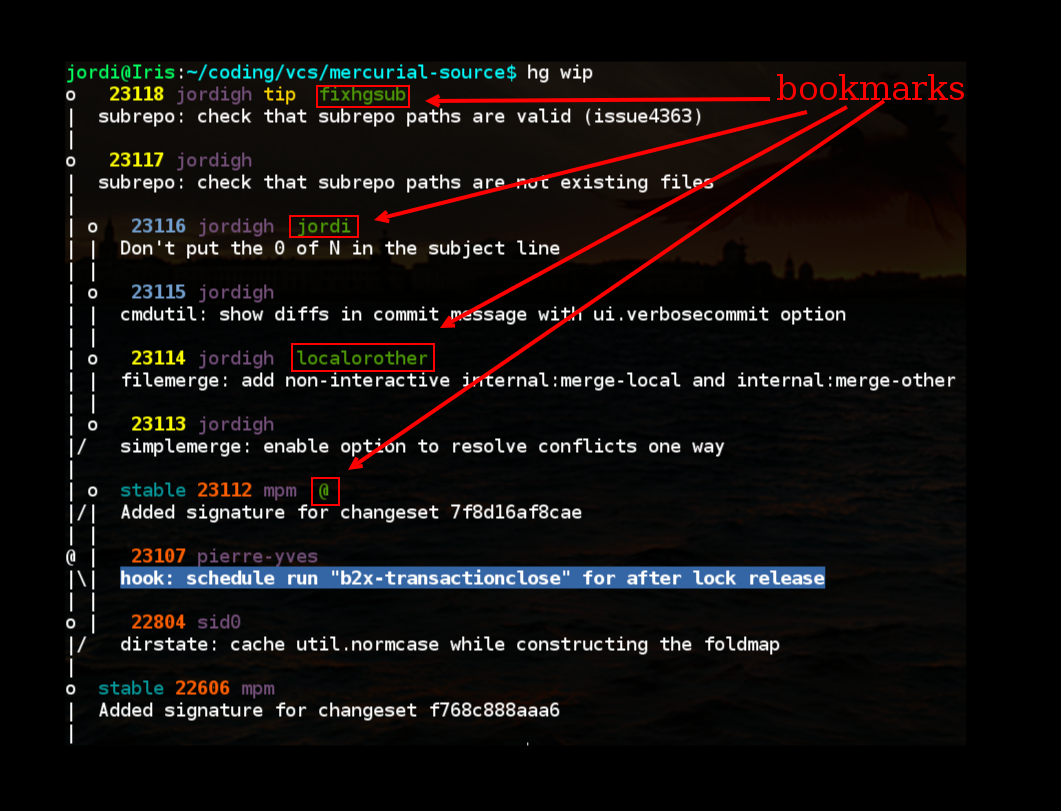

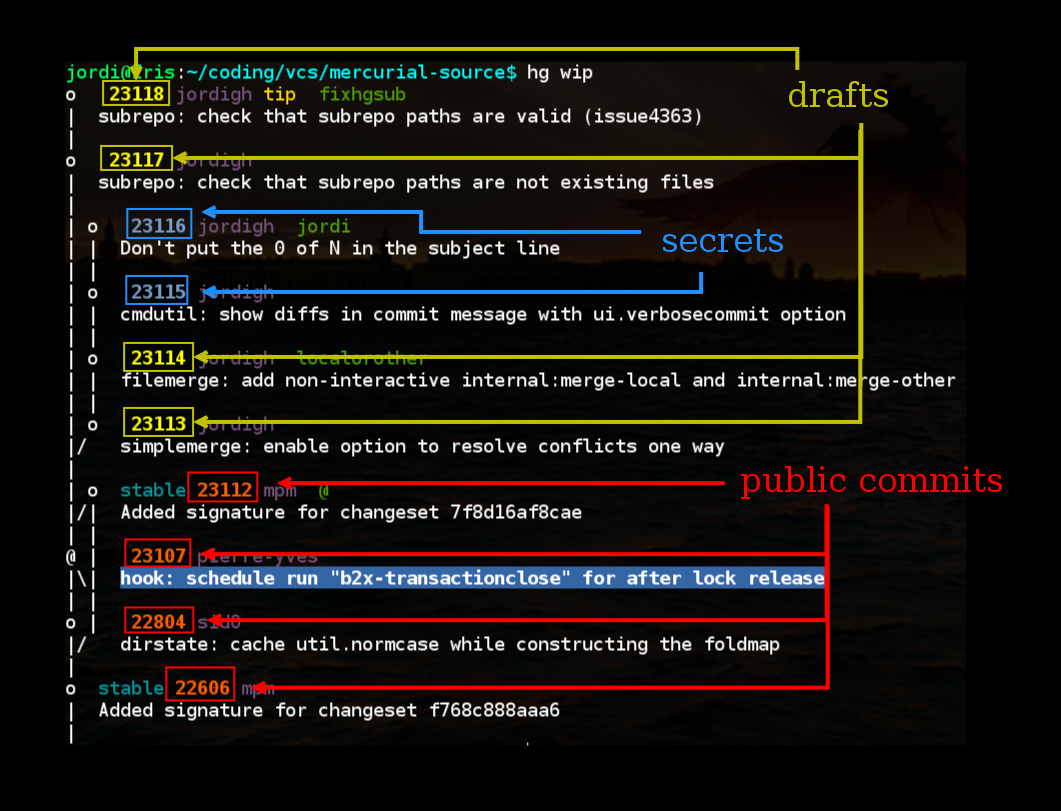

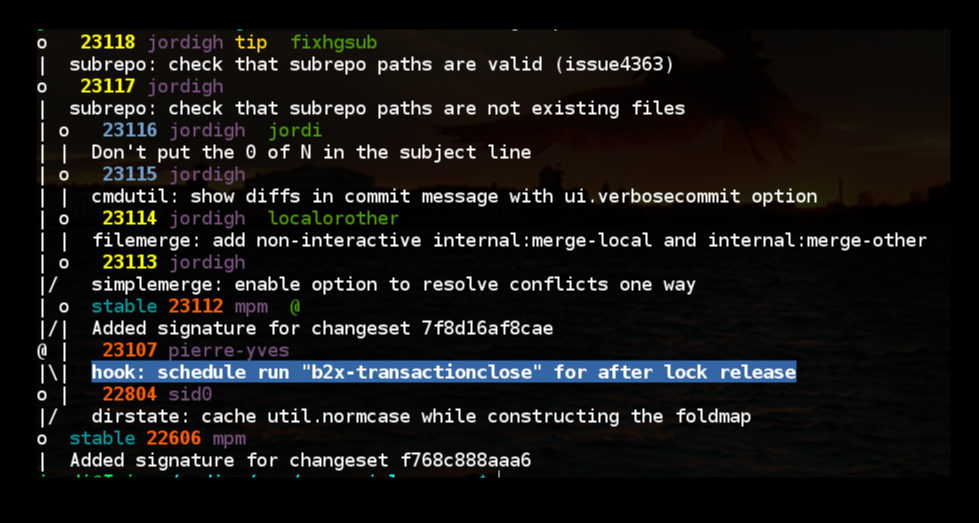

I took slightly longer than 25 days to write my Advent of Code solutions, partly because some stumped me a little and partly because around actual Christmas I wanted to spend time with family instead of writing code. When I was writing code, I would say that nearly every day of Advent of Code forced me to look into a new aspect of D. You can see my solutions in this Mercurial repository.

I am not going to go too much into details about the abstract theory concerning the solution of each problem. Perhaps another time. I will instead focus on the specific D techniques I learned about or found most useful for each.

Contents

- Day 1: parsing arguments, type conversions, template constraints

- Day 2: functional programming and uniform function call syntax

- Day 3: let’s try some complex arithmetic!

- Day 4: reusing familiar tools to find duplicates

- Day 5: more practice with familiar tools

- Day 6: ranges

- Day 7: structs and compile-time regexes

- Day 8: more compile-time fun with mixin

- Day 9: a switch statement!

- Day 10: learning what ranges cannot do

- Day 11: offline hex coding

- Day 12: for want of a set

- Day 13: more offline coding

- Day 14: reusing older code as a module

- Day 15: generators, lambdas, functions, and delegates

- Day 16: permutations with primitive tools

- Day 17: avoiding all the work with a clever observation

- Day 18: concurrency I can finally understand and write correctly

- Day 19: string parsing with enums and final switches

- Day 20: a physics problem with vector operations

- Day 21: an indexable, hashable, comparable struct

- Day 22: more enums, final switches, and complex numbers

- Day 23: another opcode parsing problem

- Day 24: a routine graph-search problem

- Day 25: formatted reads to finish off Advent of D

Day 1: parsing arguments, type conversions, template constraints

(problem statement / my solution)

For Day 1, I was planning to be a bit more careful about everything around the code. I was going to carefully parse CLI arguments, produce docstrings and error messages when anything went wrong, and carefully validate template arguments with constraints (comparable to concepts in C++). While I could have done all of this, as days went by I tried to golf my solutions, so I abandoned most of this boilerplate. Instead, I lazily relied on getting D stack traces at runtime or compiler errors when I messed up.

Had I kept it up, though, the boilerplate wouldn’t have been too bad, as you can see from my solution. Template constraints are achieved by adding if(isNumeric!numType), which checks at compile time that my template was given a template argument of the correct type, where isNumeric comes from import std.traits. I also found that getopt was a sufficiently mature standard library module for handling command-line parsing. It’s not quite as rich as Python’s argparse, merely sufficient. This about shows all it can do:

string input;

auto opts = getopt(

args,

"input|i", "Input captcha to process", &input

);

if (opts.helpWanted) {

defaultGetoptPrinter("Day 1 of AoC", opts.options);

}
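The template constraint mentioned above can be sketched as follows. This is a minimal illustration, assuming a hypothetical helper named sumAll that is not taken from the actual solution; only isNumeric from std.traits is the real library piece.

```d
import std.traits : isNumeric;

// Hypothetical helper for illustration; not part of the original solution.
// The constraint after the parameter list is checked at compile time.
numType sumAll(numType)(const numType[] values)
if (isNumeric!numType)
{
    numType total = 0;
    foreach (v; values)
        total += v;
    return total;
}

void main()
{
    assert(sumAll([1, 2, 3]) == 6);
    assert(sumAll([0.5, 1.5]) == 2.0);
    // A non-numeric element type is rejected before the program ever runs.
    static assert(!__traits(compiles, sumAll(["a", "b"])));
}
```

When the constraint fails, the compiler reports that no template matches, which is usually a clearer error than one raised deep inside the function body.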

Finally, a frequent workhorse that appeared from Day 1 was std.conv for parsing strings into numbers. A single function, to, is surprisingly versatile and does much more than that, by taking a single template argument for converting (not casting) one type into another. It knows not only how to parse strings into numbers and vice versa, but also how to convert between numerical types while keeping as much precision as possible, and how to read list or associative array literals from strings if they are in their standard string representation. It’s a good basic example of D’s power and flexibility in generic programming.
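A few of those conversions, as a minimal sketch. The input strings are made up for illustration; the only library call used is std.conv.to.

```d
import std.conv : to;

void main()
{
    // Strings to numbers and back.
    assert("42".to!int == 42);
    assert(42.to!string == "42");

    // Array literals in their standard string representation.
    assert("[1, 2, 3]".to!(int[]) == [1, 2, 3]);
    assert([1, 2, 3].to!string == "[1, 2, 3]");

    // Associative array literals work the same way.
    auto ages = `["a":1, "b":2]`.to!(int[string]);
    assert(ages["a"] == 1 && ages["b"] == 2);
}
```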

Day 2: functional programming and uniform function call syntax

(problem statement / my solution)

For whatever reason, probably because I was kind of trying to golf my solutions, I ended up writing a lot of functionalish code, with lots of map, reduce, filter, and so forth. This started early on with Day 2. D is mostly unopinionated about which style of programming one should use and offers tools to do object-orientation, functional programming, or just plain procedural programming, presenting no obstacle to mixing these styles. Lambdas are easily written inline with concise syntax, e.g. x => x*x, and the basic standard functional tools like map, reduce, filter and so on are available.

D’s approach to functional programming is quite pragmatic. While I rarely used it, because I wasn’t being too careful for these solutions, D functions can be labelled pure, which means that they can have no side effects. However, this still lets them do local impure things such as reassigning a variable or having a for loop. The only restriction is that all of their impurity must be “on the stack”, and that they cannot call any impure functions themselves.

Another feature that I came to completely fall in love with was what they call uniform function call syntax (UFCS). With some caveats, this basically means that

foo.bar(baz)

is just sugar for

bar(foo, baz)

If the function only has one argument, the round brackets are optional and foo.bar is sugar for bar(foo). This very basic syntactic convenience makes it so easy and pleasant to chain function calls together, lending itself to making it more inviting to write functional code. It also is a happy unification between OOP and FP, because syntactically it’s the same to give an object a new member function as it is to create a free-standing function whose first argument is the object.
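To make the chaining concrete, here is a small sketch comparing the nested-call style with the UFCS style. The function square and the numbers are illustrative assumptions, not code from the Day 2 solution.

```d
import std.algorithm : filter, map, sum;
import std.range : iota;

// A free-standing function; UFCS lets it be called like a method.
int square(int x) { return x * x; }

// Sum of the squares of the even numbers in [1, 7), written inside out.
int nested()
{
    return sum(map!square(filter!(x => x % 2 == 0)(iota(1, 7))));
}

// The same pipeline, read left to right thanks to UFCS.
int chained()
{
    return iota(1, 7).filter!(x => x % 2 == 0).map!square.sum;
}

void main()
{
    assert(nested() == chained());
    assert(chained() == 56); // 4 + 16 + 36
    assert(3.square == 9);   // one argument: parentheses are optional
}
```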

Day 3: let’s try some complex arithmetic!

(problem statement / my solution)

For me, 2-dimensional geometry is often very well described by complex numbers. The spiral in the problem here seemed easy to describe as an associative array from complex coordinates to integer values. So, I decided to give D’s std.complex a try. It was easy to use and there were no big surprises here.

Day 4: reusing familiar tools to find duplicates

(problem statement / my solution)

There weren’t any new D techniques here, but it was nice to see how easy it was to build a simple word counter from D builtins. Slightly disappointed that this data structure itself wasn’t builtin like Python’s own collections.Counter but hardly an insurmountable problem.
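A word counter along these lines takes only a few lines with a built-in associative array. This is a minimal sketch with a hypothetical helper name, not the code from the solution.

```d
import std.array : split;

// Hypothetical helper: count how often each whitespace-separated word occurs.
int[string] countWords(string text)
{
    int[string] counts;
    foreach (word; text.split)
        counts[word]++; // a missing key starts at int.init, i.e. 0
    return counts;
}

void main()
{
    auto counts = countWords("aa bb cc aa");
    assert(counts["aa"] == 2);
    assert(counts["bb"] == 1);
    assert("dd" !in counts);
}
```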

Day 5: more practice with familiar tools

(problem statement / my solution)

Again, not much new D here. I like the relative ease with which it’s possible to read integers into a list using map and std.conv.to.
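As a rough sketch of that idiom, assuming whitespace-separated input held in a string (the helper name parseInts is made up for illustration):

```d
import std.algorithm : map;
import std.array : array, split;
import std.conv : to;

// Hypothetical helper: split on whitespace and convert each token to int.
int[] parseInts(string text)
{
    return text.split.map!(to!int).array;
}

void main()
{
    assert(parseInts("0\n3\n0\n1\n-3") == [0, 3, 0, 1, -3]);
}
```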

Day 6: ranges

(problem statement / my solution)

There’s usually a fundamental paradigm or structure in programming languages on which everything else depends. Haskell has functions and monads, C has pointers and arrays, C++ has classes and templates, Python has dicts and iterators, Javascript has callbacks and objects, Rust has borrowing and immutability. Ranges are one of D’s fundamental concepts. Roughly speaking, a range is anything that can be iterated over, like an array or a lazy generator. Thanks to D’s powerful metaprogramming, ranges can be defined to satisfy a kind of compile-time duck typing: if it has methods to check for emptiness, get the first element, and advance to the next element, then it’s an InputRange. This duck typing is kind of reminiscent of type classes in Haskell. D’s general principle of having containers and algorithms on those containers is built upon the range concept. Ranges are intended to be a simpler reformulation of iterators from the C++ standard library.
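The compile-time duck typing can be seen in a small sketch. The Countdown struct is a made-up example; isInputRange and equal are the real library pieces.

```d
import std.algorithm : equal;
import std.range.primitives : isInputRange;

// A made-up range that counts down from n to 1.
struct Countdown
{
    int n;
    @property bool empty() const { return n <= 0; } // emptiness check
    @property int front() const { return n; }       // first element
    void popFront() { --n; }                        // advance to the next one
}

// No interface is inherited: having the three members is enough.
static assert(isInputRange!Countdown);

void main()
{
    assert(Countdown(3).equal([3, 2, 1]));

    int total;
    foreach (x; Countdown(4)) // foreach uses empty, front and popFront
        total += x;
    assert(total == 10);
}
```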

I have been using ranges all along, as foreach loops are kind of like sugar for invoking those methods on ranges. However, for day 6 I actually wanted to invoke a std.range function, enumerate. It simply iterates over a range while simultaneously producing a counter. I used this to write some brief code to obtain both the maximum of an array and the index at which it occurs.
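One way to get both the maximum and its index, as a sketch only: it pairs enumerate with maxElement from std.algorithm, which may differ from what the actual solution does, and the helper name argMax is hypothetical.

```d
import std.algorithm : maxElement;
import std.range : enumerate;

// Hypothetical helper: returns a tuple with .index and .value of the maximum.
auto argMax(int[] values)
{
    return values.enumerate.maxElement!(t => t.value);
}

void main()
{
    auto best = argMax([0, 2, 7, 0]);
    assert(best.index == 2);
    assert(best.value == 7);
}
```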

Another range-related feature that appears for the first time here is slicing. Certain random-access ranges which allow integer indexing also allow slicing. The typical method to remove elements from an array is to use this slicing. For example, to remove the first five elements and the last two elements from an array:

arr = arr[5..$-2];

Here the dollar sign is sugar for arr.length and this removal is simply done by moving some start and end pointers in memory — no other bytes are touched.

The D Tour has a good taste of ranges and Programming in D goes into more depth.

Day 7: structs and compile-time regexes

(problem statement / my solution)

My solution for this problem was more complicated, and it forced me to break out an actual tree data structure. Because I wasn’t trying to be particularly parsimonious about memory usage or execution speed, I decided to create the tree by having a node struct with a global associative array indexing all of the nodes.

In D, structs have value semantics and classes have reference semantics. Roughly, this means that structs are on the stack, they get copied around when being passed into functions, while classes are always handled by reference instead and dynamically allocated and destroyed. Another difference between structs and classes is that classes have inheritance (and hence, polymorphic dispatch) but structs don’t. However, you can give structs methods, and they will have an implicit this parameter, although this is little more than sugar for free-standing functions.
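The difference between value and reference semantics shows up as soon as something is passed to a function. A minimal sketch with made-up types:

```d
struct PointS { int x; } // struct: value semantics
class PointC { int x; }  // class: reference semantics

void bump(PointS p) { p.x++; } // modifies a copy
void bump(PointC p) { p.x++; } // modifies the shared object

void main()
{
    auto s = PointS(1);
    bump(s);
    assert(s.x == 1); // the caller's struct is untouched

    auto c = new PointC;
    c.x = 1;
    bump(c);
    assert(c.x == 2); // the caller sees the change
}
```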

Enough on OOP. Let’s talk about the really exciting stuff: compile-time regular expressions!

For this problem, there was some input parsing to do. Let’s look at what I wrote:

void parseLine(string line) {

static nodeRegex = regex(r"(?P<name>\w+) \((?P<weight>\d+)\)( -> (?P<children>[\w,]+))?");

auto row = matchFirst(line, nodeRegex);

// init the node struct here

}

The static keyword instructs D that this variable has to be computed at compile-time. D’s compiler basically has its own interpreter that can execute arbitrary code as long as all of the inputs are available at compile time. In this case, this parses and compiles this regex into the binary. The next line, where I call matchFirst on each line, is done at runtime, but if for whatever reason I had these strings available at compile time (say, defined as a big inline string a few lines above the same source file), I could also do the regex parsing at compile time if I wanted to.

This is really nice. This is one of my favourite D features. Add a static and you can precompute into your binary just about anything. You often don’t even need any extra syntax. If the compiler realises that it has all of the information at compile time to do something, it might just do it. This is known as compile-time function execution, hereafter, CTFE. The D Tour has a good overview of the topic.
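A tiny CTFE sketch, using a made-up factorial function rather than anything from the solution: the same ordinary function runs at compile time when its result is needed for an enum or a static assert, and at runtime otherwise.

```d
// An ordinary function, with nothing marking it as compile-time.
ulong factorial(uint n)
{
    return n <= 1 ? 1 : n * factorial(n - 1);
}

// An enum initialiser must be known at compile time, so this call is CTFE.
enum fact10 = factorial(10);
static assert(fact10 == 3_628_800);

void main()
{
    // The very same function, called at runtime.
    assert(factorial(5) == 120);
}
```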

Day 8: more compile-time fun with mixin

(problem statement / my solution)

Day 8 was another problem where the most interesting part was parsing. As before, I used a compile-time regex. But the interesting part of this problem was the following bit of code for parsing strings into their corresponding D comparison operation, as I originally wrote it:

auto comparisons = [

"<": function(int a, int b) => a < b,

">": function(int a, int b) => a > b,

"==": function(int a, int b) => a == b,

"<=": function(int a, int b) => a <= b,

">=": function(int a, int b) => a >= b,

"!=": function(int a, int b) => a != b,

];

Okay, this isn’t terrible. It’s just… not very pretty. I don’t like that it’s basically the same line repeated six times. Furthermore, I don’t like that within each line, I have to repeat the operator in the string part and in the function body. Enter the mixin keyword! Basically, string mixins let you compile any string that is known at compile time as D code. They’re kind of like the C preprocessor, but much safer. For example, string mixins only evaluate complete expressions, so no shenanigans like #define private public are allowed. My first attempt to shorten the above looked like this:

bool function(int,int)[string] comparisons;

static foreach(cmp; ["<", ">", "==", "<=", ">=", "!="]) {

comparisons[cmp] = mixin("function(int a, int b) => a "~cmp~" b");

}

Since I decided to use a compile-time static loop to populate my array, I now needed a separate declaration of the variable which forced me to spell out its ungainly type: an associative array that takes a string and returns a function with that signature. The mixin here takes a concatenated string that evaluates to a function.

However, this didn’t work for two reasons!

The first one is that static foreach was introduced in September 2017. The D compilers packaged in Debian didn’t have it yet when I wrote that code! The second problem is more subtle: initialisation of associative arrays currently cannot be statically done because their internal data structures rely on runtime computations, according to my understanding of this discussion. They might fix it some day?

So, next best thing is my final answer:

bool function(int,int)[string] comparisons;

auto getComparisons(Args...)() {

foreach(cmp; Args) {

comparisons[cmp] = mixin("function(int a, int b) => a "~cmp~" b");

}

return comparisons;

}

shared static this() {

comparisons = getComparisons!("<", ">", "==", "<=", ">=", "!=");

}

Alright, by size this is hardly shorter than the repetitive original. But I still think it’s better! It has no dull repetition where bugs are most often introduced, and it’s using a variable-argument templated function so that the mixin can have its values available at compile time. It uses the next best thing to compile-time initialisation, which is a module initialiser shared static this() that just calls the function to perform the init.

Day 9: a switch statement!

(problem statement / my solution)

Day 9 was a simpler parsing problem, so simple that instead of using a regex I decided to just use a switch statement. There isn’t anything terribly fancy about switch statements, and they work almost exactly the same as they do in other languages. The only distinct features of switch statements in D are that they work on numeric, string, or bool types and that they have deprecated implicit fallthrough. Fallthrough instead must be explicitly done with goto case; or will be once the deprecation is complete.

Oh, and you can also specify ranges for a case statement, e.g.

case 'a': .. case 'z':

// do stuff with lowercase ASCII

break;

It’s the small conveniences that make this pleasant. Programming in D has a good discussion on switch statements.
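Putting case ranges and explicit fallthrough together, here is a short sketch. The function classify and its return codes are invented for illustration.

```d
// Hypothetical classifier: 1 = lowercase letter, 2 = digit, 3 = blank, 0 = other.
int classify(char c)
{
    switch (c)
    {
        case 'a': .. case 'z':
            return 1;
        case '0': .. case '9':
            return 2;
        case ' ':
            goto case; // explicit fallthrough into the next case
        case '\t':
            return 3;
        default:
            return 0;
    }
}

void main()
{
    assert(classify('q') == 1);
    assert(classify('7') == 2);
    assert(classify(' ') == 3);
    assert(classify('!') == 0);
}
```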

Day 10: learning what ranges cannot do

(problem statement / my solution)

So, superficially, you might think that expressions like arr[2..$-2], which is valid, would also allow for things like arr[$-2..1] to traverse the array in reverse order or some other syntax for having a different step size than +1. At least I did. These kinds of array indexing are common in numerical array languages and libraries such as Octave, Julia, R, or Python’s numpy. So for day 10’s hash, which requires reversing an array, I thought I could just do that.

Turns out that the language doesn’t have syntax to allow this, but after a quick trip to the standard library I found the necessary functions. What I thought could be written as

instead became

Other than this minor discovery about ranges, Day 10 was more about getting the algorithm right than using any specialised D utilities. Since real hashes typically allow several sizes, I templated the hash functions with the total size, rounds of hashing, and chunk size, with a template constraint that the chunk size must divide the total size:

auto getHash(int Size=256, int Rounds=64, int ChunkSize=16)(string input)

if( Size % ChunkSize == 0)

{

// ...

}

Nothing new here. I just like that template constraints are so easy to write.
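For the reversal discussed at the start of this section, the standard library offers at least two routes. The following is a minimal sketch with made-up indices and a hypothetical helper name, not the snippet from the solution: std.range.retro gives a lazy reversed view of a slice, while std.algorithm.reverse reverses a slice in place.

```d
import std.algorithm : equal, reverse;
import std.range : retro;

// Hypothetical helper: reverse arr[lo .. hi] in place and return the array.
int[] reverseSpan(int[] arr, size_t lo, size_t hi)
{
    reverse(arr[lo .. hi]);
    return arr;
}

void main()
{
    auto arr = [0, 1, 2, 3, 4, 5];

    // Lazy view: nothing is copied or modified.
    assert(arr[1 .. 4].retro.equal([3, 2, 1]));
    assert(arr == [0, 1, 2, 3, 4, 5]);

    // In place: the slice shares memory with arr, so arr changes.
    assert(reverseSpan(arr, 1, 4) == [0, 3, 2, 1, 4, 5]);
}
```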

Day 11: offline hex coding

(problem statement / my solution)

I did most of Day 11 on paper. It took me a while to figure out a proper hex coordinate system and what the distance function in that coordinate system should be. I had seen hex coordinates from playing Battle for Wesnoth, but it took me a while to figure them out again. Once I had that, the actual D code was pretty simple and used no techniques I hadn’t seen before. I think this is the first time I used the cumulativeFold function, but other than that, nothing to see here. An immutable global associative array populated at module init time like before,

pure static this(){

directions = [

"ne": [1,1],

"n": [0,1],

"nw": [-1,0],

"sw": [-1,-1],

"s": [0,-1],

"se": [1,0],

];

}

and that’s it.

Day 12: for want of a set

(problem statement / my solution)

The only new D technique for this problem was that I decided to use a set structure to keep track of which graph nodes had been visited. The only problem is that D doesn’t have a built-in set structure (yet?), but it does have a setDifference function. It’s a bit clunky. It only works on ordered arrays, but that was sufficient for my purpose here, and probably not much worse than hashing with a traditional set structure would have been.

One further observation: D has an in keyword, which can be used to test membership, like in Python (it also has an unrelated use for defining input and output arguments to functions), but unlike Python, only for associative arrays. This makes sense, because the complexity of testing for membership for other data structures can vary widely depending on the structure and the chosen algorithm, and there isn’t a clear universal choice like there is for associative arrays.

If desired, however, it’s possible to define the in operator for any other class, like so:

bool opBinaryRight(string op : "in")(T elt) {

// check that elt is in `this`

}

I would assume that’s what you could use to write a set class for D.
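As a rough sketch of that idea, here is a toy set built on top of an associative array. The Set type and its insert method are hypothetical, invented for this illustration; they are not part of the standard library.

```d
// A toy set for illustration only; not a standard library type.
struct Set(T)
{
    private bool[T] items;

    void insert(T elt) { items[elt] = true; }

    // Called for `elt in set`; the built-in `in` on the associative
    // array yields a pointer, which is null when the key is absent.
    bool opBinaryRight(string op : "in")(T elt) const
    {
        return (elt in items) !is null;
    }
}

void main()
{
    Set!int seen;
    seen.insert(3);
    assert(3 in seen);
    assert(!(4 in seen));
}
```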

Day 13: more offline coding

(problem statement / my solution)

This one is another where I did most of the solution on paper and thus managed to write a very short program. No new D techniques here, just the usual functionalish style that I seem to be developing.

Day 14: reusing older code as a module

(problem statement / my solution)

The problem here is interesting because I’ve solved this labelling of connected components problem before in C++ for GNU Octave. I wrote the initial bwlabeln implementation using union-find. I was tempted to do the same here, but I couldn’t think of a quick way to do so, and talking to others in the #lobsters channel in IRC, I realised that a simpler recursive solution would work without overflowing the stack (because the problem is small enough, not because a stack-based algorithm is clever).

The interesting part is reusing an earlier solution, the hashing algorithm from Day 10. At first blush, this is quite simple: every D file also creates its own module, namespaced if desired by directories. It’s very reminiscent of Python’s import statement and module namespacing. The only snag is that my other file has a void main(string[] args) function and so does this one. The linker won’t like that duplicate definition of symbols. For this purpose, D offers conditional compilation, which in C and C++ is usually achieved via a familiar C preprocessor macro idiom.

In D, this idiom is codified into the language proper via the version keyword, like so:

version(standalone) {

void main(string[] args){

// do main things here

}

}

This instructs the compiler to compile the inside of the version block only if an option called “standalone” is passed in,

gdc -O2 -fversion=standalone app.d -o day10

or, with regrettably slightly different flags,

ldc2 -O2 -d-version=standalone app.d -of day10

There are other built-in arguments for version, such as “linux” or “OSX” to conditionally compile for a particular operating system. This keyword offers quite a bit of flexibility for conditional compilation, and it’s a big improvement over C preprocessor idioms.

Day 15: generators, lambdas, functions, and delegates

(problem statement / my solution)

This problem was an opportunity to test out a new function, generate, which takes a function and calls it repeatedly to produce a range. The closest Haskell counterpart is iterate, which I think is a better name. It’s also a lazy generator, so you need something like take to say how much of the generator you want to use. For example, the Haskell code

can be translated into D as

auto x = 1;

auto pows = generate!(() => x *= 2).take(11); // generate calls a zero-argument function repeatedly

There are other examples in the documentation.

Let’s also take a moment here to talk about the different anonymous functions in D. The following both declare a function that squares its input:

function(int a) { return a^^2;}

delegate(int a) { return a^^2;}

The difference is just a question of closure. The delegate version carries a hidden pointer to its enclosing scope, so it can dynamically close over the outer scope variables. If you can’t afford to pay this runtime penalty, the function version doesn’t reference the enclosing scope (no extra pointer). So, for a generator, you typically want to use a delegate, since you want the generator to remember its scoped variables across successive calls, like what I did:

auto generator(ulong val, ulong mult) {

return generate!(delegate(){

val = (val * mult) % 2147483647;

return val;

}

);

}

This function returns a generator range where each call produces the next entry of this pseudorandom linear congruential generator.

The delegate/function is part of the type, and can be omitted if it can be inferred by context (e.g. when passing a function into another function as an argument). Furthermore, there’s a lambda shorthand that I have been using all along, where the {return foo;} boilerplate can be shortened to just => like so:

a => a^^2

This form is only valid where there’s enough context to infer if it’s a delegate or a function, as well as the type of a itself. More details in the language spec.
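The three forms side by side, in a small sketch with made-up names. The delegate is the only one that reads a variable from its enclosing scope, which is why it observes later changes to that variable.

```d
// Returns the results of calling each kind of anonymous function.
int[4] closureDemo()
{
    // No context pointer: cannot refer to local variables.
    int function(int) square = function(int a) { return a ^^ 2; };

    // Carries a pointer to the enclosing scope, so it can read `offset`.
    int offset = 10;
    int delegate(int) shift = delegate(int a) { return a + offset; };

    // Lambda shorthand: the parameter type is inferred from the target type.
    int function(int) cube = a => a ^^ 3;

    int before = shift(1); // 1 + 10
    offset = 20;
    int after = shift(1);  // 1 + 20, the delegate sees the new value

    return [square(3), before, after, cube(2)];
}

void main()
{
    assert(closureDemo() == [9, 11, 21, 8]);
}
```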

Day 16: permutations with primitive tools

(problem statement / my solution)

This permutations problem made me reach for the std.algorithm function bringToFront for cyclically permuting an array in place like so,

bringToFront(progs[rot..$], progs[0..rot]);

It’s a surprisingly versatile function that can be used to perform more tricks than cyclic permutations. Its documentation is worth a read.
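For reference, the rotation pattern shown in the bringToFront documentation looks like the sketch below. It uses an int array and a hypothetical helper name, and it follows the documented argument order (front part first, then the part to bring forward), which is not necessarily how the call above is arranged.

```d
import std.algorithm : bringToFront;

// Hypothetical helper: rotate `items` left by `rot` positions, in place.
int[] rotateLeft(int[] items, size_t rot)
{
    bringToFront(items[0 .. rot], items[rot .. $]);
    return items;
}

void main()
{
    assert(rotateLeft([0, 1, 2, 3, 4], 2) == [2, 3, 4, 0, 1]);
}
```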

I also ran into a D bug here. I had to create a character array from an immutable input string, but due to Unicode-related reasons that D has for handling characters especially, I had to cast to ubyte[] instead of char[].

Besides that, for the second part where you had to realise that permutations cannot have too big of an orbit, I also ended up using a string array with the canFind from std.algorithm. I would have preferred a string set with hashing instead of linear searching, but it didn’t make a huge difference for the size of this problem.

I really want sets in the D standard library. Maybe I should see what I can do to make them happen.

Day 17: avoiding all the work with a clever observation

(problem statement / my solution)

This puzzle is a variation of the Josephus problem. I needed some help from #lobsters in IRC to figure out how to solve it. There aren’t any new D techniques, just some dumb array concatenation with the tilde operator for inserting elements into an array:

circ = circ[0..pos] ~ y ~ circ[pos..$];

The second part can be solved via the simple observation that you only need to track one position, the one immediately following zero.

Day 18: concurrency I can finally understand and write correctly

(problem statement / my solution)

This day was an exciting one for me. Discussing this problem with others, it seems many people had much difficulty solving this problem in other programming languages. Most people seemed to have to emulate their own concurrency with no help from their programming language of choice. In contrast, D absolutely shined here, because it is based on the actor concurrency model (message passing), which precisely fits the problem statement. (There are other concurrency primitives in case the actor concurrency model isn’t sufficient, but it was sufficient for me.)

The basic idea of concurrency in D is that each thread of execution localises all of its state. By default, threads share no data. In order to communicate with each other, threads pass messages. A thread can indicate at any time when it’s ready to send or receive messages. Messages can be any type, and each thread says what type it’s expecting to receive. If a thread receives a type it’s not prepared to handle, it will throw an exception.

There are more details, such as what happens if a thread receives too many messages but doesn’t respond to any of them. Let’s not go into that now. Basic idea: threads get spawned, threads send and receive messages.

Let’s spend a little bit of time looking at the relevant functions and types I used, all defined in std.concurrency:

- spawn: Starts a thread of execution. The first argument is a reference to the function that this thread will execute, followed by any arguments that function may take. Returns a Tid, a thread ID. This is used as the address to send messages to. A thread can refer to its parent thread via the special variable ownerTid.

  Unless explicitly declared shared, the arguments of a threaded function must be immutable or plain values that carry no mutable references. This is how the compiler guarantees no race conditions when manipulating those variables. Of course, with shared variables, the programmer is signalling that they are taking over synchronisation of that data, which may require using low-level mutexes.

- send: Sends a message to a particular thread. The first argument is the thread ID. The other arguments can be anything. It’s up to the receiving thread to handle the arguments it receives.

- receiveOnly: Indicates that this thread is ready to receive a single type, and returns the value of that type. The type must of course be specified as a compile-time argument.

- receive: Indicates what to do with any of several possible types. The arguments to this function are a collection of functions, whose parameter types will be dynamically type-matched with received types. I didn’t need this function, but I wanted to mention it exists.

- receiveTimeout: The problem statement is designed to deadlock. Although there probably is a more elegant solution, timing out on deadlock was the solution I wrote. This function does just that: it listens for a message for a set amount of time. If a message is received in the designated time, its handler function is executed and receiveTimeout returns true. If the timeout happens, it returns false instead.

Armed with these tools, the solution was a breeze to write. I first spawn two threads and save their thread ids,

auto tid1 = spawn(&runProgram, opcodes, 0);

auto tid2 = spawn(&runProgram, opcodes, 1);

Each of the two threads defined by runProgram then immediately stops, waiting for a thread id, to know whom to talk to,

void runProgram(immutable string[] opcodes, ulong pid) {

auto otherProg = receiveOnly!Tid();

// ...

}

The parent thread then connects the two worker threads to each other,

send(tid1, tid2);

send(tid2, tid1);

And off they go, the two threads run through the opcodes in the problem statement, and eventually they deadlock, which I decided to handle with a timeout like so,

case "rcv":

if (!receiveTimeout(100.msecs, (long val) {regs[reg] = val;})) {

goto done;

}

// no timeout, handle next opcode

goto default;

After the thread has timed out, it signals to the parent thread that it’s done,

done:

send(ownerTid, thisTid, sent);

The parent in turn receives two tuples with thread id and computed value from each thread, and based on that decides what to output, after figuring out which thread is which,

// Wait for both children to let us know they're done.

auto done1 = receiveOnly!(Tid, long);

auto done2 = receiveOnly!(Tid, long);

if (done1[0] == tid2) {

writeln(done1[1]);

}

else {

writeln(done2[1]);

}

And voilà, concurrency easy-peasy.
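The fragments above can be condensed into one self-contained sketch of the same pattern. This is a toy, not the Day 18 solution: the worker function, the values exchanged, and the 500 ms timeout are all assumptions made for illustration. Two workers are spawned, introduced to each other, swap one number, and report back to the parent.

```d
import core.time : msecs;
import std.concurrency : ownerTid, receiveOnly, receiveTimeout, send, spawn, Tid;

// Hypothetical worker: learns its partner, sends it one number,
// waits (with a timeout) for the partner's number, then reports to the parent.
void worker(int id)
{
    auto partner = receiveOnly!Tid();
    send(partner, id * 10);

    int got = -1;
    const ok = receiveTimeout(500.msecs, (int v) { got = v; });
    send(ownerTid, id, ok ? got : -1);
}

// Returns what worker 1 and worker 2 each received from the other.
int[2] exchange()
{
    auto a = spawn(&worker, 1);
    auto b = spawn(&worker, 2);

    // Introduce the two workers to each other.
    send(a, b);
    send(b, a);

    int[2] results;
    foreach (_; 0 .. 2)
    {
        // The reports may arrive in either order, so index by worker id.
        auto report = receiveOnly!(int, int)();
        results[report[0] - 1] = report[1];
    }
    return results;
}

void main()
{
    assert(exchange() == [20, 10]);
}
```

No locks appear anywhere: each worker only touches its own local variables, and everything else travels as a message.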

Day 19: string parsing with enums and final switches

(problem statement / my solution)

The only new D technique here is the final switch. A final switch is for enum types, and makes the compiler enforce that you write a case for each of the possible values. That’s what I did here, where I wanted to make sure I matched the up, down, left, and right directions:

final switch(dir){

case DIR.d:

j++;

break;

case DIR.u:

j--;

break;

case DIR.l:

i--;

break;

case DIR.r:

i++;

break;

}

The rest of the problem is merely some string parsing.
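A self-contained version of the same idea, with a made-up enum and helper: if a member is later added to the enum, every final switch over it stops compiling until the new case is handled.

```d
enum Dir { up, down, left, right }

// Hypothetical helper: move a grid position [i, j] one step in `dir`.
int[2] step(int[2] pos, Dir dir)
{
    final switch (dir) // no default allowed; every member must appear
    {
        case Dir.up:    pos[1]--; break;
        case Dir.down:  pos[1]++; break;
        case Dir.left:  pos[0]--; break;
        case Dir.right: pos[0]++; break;
    }
    return pos;
}

void main()
{
    assert(step([0, 0], Dir.right) == [1, 0]);
    assert(step([0, 0], Dir.up) == [0, -1]);
}
```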

Day 20: a physics problem with vector operations

(problem statement / my solution)

I have not yet done serious numerical work with D, but I can see that it has all of the necessary ingredients for it. One of the most obvious amongst these is that it has built-in support for writing vector instructions. Given a struct to model a particle in motion,

struct particle {

double[3] pos;

double[3] vel;

double[3] acc;

}

the following function returns another particle where each of the vectors has been divided by its norm (i.e. normalised to 1):

auto unit(particle p) {

auto

pos = p.pos,

vel = p.vel,

acc = p.acc;

pos[] /= pos.norm;

vel[] /= vel.norm;

acc[] /= acc.norm;

particle u = {pos, vel, acc};

return u;

}

This vec[] /= scalar notation divides all of the vector by the given scalar. But that’s not all. You can also add or multiply vectors elementwise with similar syntax,

double[3] diff1 = p.pos, diff2 = p.vel;

diff1[] -= p.vel[];

diff2[] -= p.acc[];

Here diff1 and diff2 give the vector differences between position and velocity and between velocity and acceleration, respectively (I use the criterion of all three of these being mostly collinear to determine if all particles have escaped the system and thus can no longer interact with any other particle).

This is mostly syntactic sugar, however. Although the D compiler can sometimes turn instructions like these into native vector instructions like AVX, real vectorisation has to be done via some standard library support.
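The slice operations from this section can be put together into a small runnable sketch. The norm helper is not shown above, so the version here is an assumed implementation (a plain Euclidean norm), and diff is a hypothetical helper added only for illustration.

```d
import std.algorithm : map, sum;
import std.math : sqrt;
import std.stdio;

// Assumed helper: Euclidean norm of a 3-vector.
double norm(const double[3] v) {
    return sqrt(v[].map!(x => x * x).sum);
}

// Hypothetical helper: elementwise difference of two 3-vectors.
double[3] diff(const double[3] a, const double[3] b) {
    double[3] result;
    result[] = a[] - b[];
    return result;
}

void main() {
    double[3] v = [3.0, 0.0, 4.0];
    writeln(v.norm);  // 5
    v[] /= v.norm;    // every element divided by the same scalar
    writeln(v);       // v now has (approximately) unit length
    writeln(diff([1.0, 2.0, 3.0], [0.5, 0.5, 0.5])); // [0.5, 1.5, 2.5]
}
```

Static arrays like double[3] are value types, so passing them to norm or diff copies them and the caller's vector is left untouched.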

Day 21: an indexable, hashable, comparable struct

(problem statement / my solution)

I was happy to recognise that I could solve this problem by considering the dihedral group of the square, whose eight symmetries I generated via some string mixins:

immutable dihedralFun =

"function(ref const Pattern p) {

auto n = p.dim;

auto output = new int[][](n, n);

foreach(i; 0..n) {

foreach(j; 0..n) {

output[i][j] = p.grid[%s][%s];

}

}

return output;

}";

immutable dihedralFourGroup = [

mixin(format(dihedralFun, "i", "j")),

mixin(format(dihedralFun, "n-i-1", "j")),

mixin(format(dihedralFun, "i", "n-j-1")),

mixin(format(dihedralFun, "n-i-1", "n-j-1")),

mixin(format(dihedralFun, "j", "i")),

mixin(format(dihedralFun, "n-j-1", "i")),

mixin(format(dihedralFun, "j", "n-i-1")),

mixin(format(dihedralFun, "n-j-1", "n-i-1")),

];

This isn’t a new technique, but I’m really happy with how it turned out. Almost like Lisp macros, but without devolving into the lawless chaos of Python or JavaScript eval or C preprocessor macros. As an aside, the format function accepts formatted strings with POSIX syntax for positional arguments, but there isn’t anything built-in as nice as Perl string interpolation or Python format strings.
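To see the mixin-plus-format trick in isolation, here is a smaller sketch of the same pattern. The names binaryFun and ops are invented for this example; the point is only that format runs at compile time and the resulting string is compiled as an ordinary function literal.

```d
import std.format : format;
import std.stdio;

// Template for a function literal; the %s is filled in at compile time.
enum binaryFun = "function(int a, int b) { return a %s b; }";

// Each mixin expands to a real, type-checked function literal.
immutable ops = [
    mixin(format(binaryFun, "+")),
    mixin(format(binaryFun, "-")),
    mixin(format(binaryFun, "*")),
];

void main() {
    foreach (op; ops)
        writeln(op(6, 3)); // prints 9, 3, 18 on separate lines
}
```

A typo inside the template string shows up as a compile error at the mixin site, which is what keeps this from feeling like eval.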

The real meat of this problem was to implement a grid structure that could be hashed, compared, and indexed. This is all done with a number of utility functions. For indexing and slicing, the basic idea is that for a user-defined type foo

foo[bar..baz]

is sugar for

foo.opIndex(foo.opSlice(bar, baz))

so those are the two functions you need to implement for indexing and slicing. Similarly, for equality comparison and hashing, you implement opEquals and toHash respectively. I relied on the dihedral functions above for comparison for this problem.

After implementing these functions for a struct (recall: like a class, but with value semantics and no inheritance), the rest of the problem was string parsing and a bit of logic to implement the fractal-like growth rule.
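As a rough sketch of what such a struct can look like, here is a toy grid, not my actual Pattern type. The field layout and every name in it are assumptions made for the example, and it uses the templated opSlice!dim form, which is what two-dimensional slicing goes through.

```d
import std.stdio;

// Toy grid stored row-major; a hypothetical stand-in for a real pattern type.
struct Grid {
    size_t rows, cols;
    int[] cells;

    // grid[i, j] reads a single cell.
    int opIndex(size_t i, size_t j) const { return cells[i * cols + j]; }

    // lo..hi in dimension dim becomes a pair of endpoints for opIndex.
    size_t[2] opSlice(size_t dim)(size_t lo, size_t hi) const { return [lo, hi]; }

    // grid[r0..r1, c0..c1] copies out a sub-grid.
    Grid opIndex(size_t[2] r, size_t[2] c) const {
        int[] sub;
        foreach (i; r[0] .. r[1])
            sub ~= cells[i * cols + c[0] .. i * cols + c[1]];
        return Grid(r[1] - r[0], c[1] - c[0], sub);
    }

    // Equality and hashing by content, so grids can be associative array keys.
    bool opEquals(const Grid other) const {
        return rows == other.rows && cols == other.cols && cells == other.cells;
    }
    size_t toHash() const nothrow @safe { return hashOf(cells); }
}

void main() {
    auto g = Grid(2, 2, [1, 2, 3, 4]);
    writeln(g[1, 0]);                 // 3
    writeln(g[0 .. 1, 0 .. 2].cells); // [1, 2]

    int[Grid] seen;
    seen[g] = 1;
    writeln(Grid(2, 2, [1, 2, 3, 4]) in seen ? "seen" : "new"); // seen
}
```

Two grids that compare equal must hash to the same value, which is why both opEquals and toHash look only at the contents.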

Day 22: more enums, final switches, and complex numbers

(problem statement / my solution)

Another rectangular grid problem, which I again decided to represent via complex numbers. The possible infection states given in the problem I turned into an enum which I then checked with a final switch as before. The grid is then just an associative array from complex grid positions to infection states.

Nothing new here. By now these are all familiar tools and writing D code is becoming habitual for me.

Day 23: another opcode parsing problem

(problem statement / my solution)

This problem wasn’t technically difficult from a D point of view. The usual switches and string parsing techniques of days 8 and 18 work just as well. In fact, I started with the code of day 18 and modified it slightly to fit this problem.

The challenge was to statically analyse the opcode program to determine that it is implementing a very inefficient primality testing algorithm. I won’t go into an analysis of that program here because others have already done a remarkable job of explaining it. Once this analysis was complete, the meat of the problem then becomes to write a faster primality testing algorithm, such as dumb (but not too dumb) trial division,

auto isComposite(long p) {

// iota excludes its upper bound, so add 1 to also try the square root itself.

return iota(2, cast(long) sqrt(cast(double) p) + 1).filter!(x => p % x == 0).any;

}

and use this test at the appropriate location.
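Here is a standalone sketch of trial division with the imports it needs. It is one possible way to write the test, assuming an inclusive upper bound at the integer square root, and the choice of any with a predicate is just a stylistic variation.

```d
import std.algorithm : any, filter;
import std.math : sqrt;
import std.range : iota;
import std.stdio;

// Trial division: p is composite if some d with 2 <= d <= sqrt(p) divides it.
bool isComposite(long p) {
    // iota excludes its upper bound, hence the + 1 so perfect squares are caught.
    auto limit = cast(long) sqrt(cast(double) p) + 1;
    return iota(2L, limit).any!(d => p % d == 0);
}

void main() {
    assert(isComposite(25));   // 5 * 5
    assert(!isComposite(29));  // prime
    // All composite numbers between 2 and 19.
    writeln(iota(2L, 20L).filter!isComposite); // [4, 6, 8, 9, 10, 12, 14, 15, 16, 18]
}
```

The perfect-square case is the one that is easy to get wrong, since the only nontrivial divisor may be the square root itself.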

Day 24: a routine graph-search problem

(problem statement / my solution)

This problem required some sort of graph structure, which I implemented as an associative array from node ids to all edges incident to that node. The problem then reduces to some sort of graph traversal (I did depth-first search), keeping track of edge weights.
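A minimal sketch of that shape follows. It is illustrative only: the Edge type, the heaviest function, and the rule of never visiting a node twice are assumptions for the example rather than the actual rules of the puzzle.

```d
import std.algorithm : max;
import std.stdio;

// Hypothetical edge type: the other endpoint plus a weight.
struct Edge { int to; long weight; }

// Depth-first search for the heaviest path from node that visits no node twice.
long heaviest(const Edge[][int] graph, int node, ref bool[int] visited) {
    visited[node] = true;
    long best = 0;
    if (auto edges = node in graph) {
        foreach (e; *edges) {
            if (e.to !in visited)
                best = max(best, e.weight + heaviest(graph, e.to, visited));
        }
    }
    visited.remove(node); // backtrack so other branches may pass through node
    return best;
}

void main() {
    Edge[][int] graph;
    void connect(int a, int b, long w) {
        graph[a] ~= Edge(b, w);
        graph[b] ~= Edge(a, w);
    }
    connect(0, 1, 2);
    connect(1, 2, 5);
    connect(0, 2, 1);

    bool[int] visited;
    writeln(heaviest(graph, 0, visited)); // 7, via 0 -> 1 -> 2
}
```

Appending with ~= to a missing key creates the entry on the fly, which keeps the graph-building code short.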

No new D techniques here either, just more practice with my growing bag of tricks.

Day 25: formatted reads to finish off Advent of D

(problem statement / my solution)

The final problem involved parsing a slightly more verbose DSL. For this, I decided to use formatted strings for reading, like so,

auto branchFmt =

" - Write the value %d.

- Move one slot to the %s.

- Continue with state %s.

";

auto parseBranch(File f) {

int writeval;

string movedir;

char newstate;

f.readf(branchFmt, &writeval, &movedir, &newstate);

return Branch(writeval ? true : false, movedir == "left" ? -1 : 1, newstate);

}

This is admittedly a bit brittle. Even the type check between the formatted string and the expected types is done at runtime (but newer D versions have a compile-time version of readf for type-checking the format string). An error here can cause exceptions at runtime.
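The same idea can be tried without a file by using formattedRead on a string. This is a sketch under a few assumptions: the Branch struct here is a simplified stand-in, and the format string is written on one line with explicit \n escapes.

```d
import std.format : formattedRead;
import std.stdio;

// Simplified, hypothetical stand-in for the Branch type used above.
struct Branch { int writeval; string movedir; char newstate; }

enum branchFmt =
    "- Write the value %d.\n- Move one slot to the %s.\n- Continue with state %s.\n";

Branch parseBranch(string input) {
    Branch b;
    // The format string is only checked against the input and the
    // argument types at runtime, so a mismatch surfaces as an exception.
    input.formattedRead(branchFmt, &b.writeval, &b.movedir, &b.newstate);
    return b;
}

void main() {
    auto b = parseBranch(
        "- Write the value 1.\n- Move one slot to the left.\n- Continue with state B.\n");
    writeln(b.writeval, " ", b.movedir, " ", b.newstate); // 1 left B
}
```

Note how the %s for the string stops at the full stop that follows it in the format, which is what makes this style of parsing workable at all.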

Other than this, the only new technique here is that I actually wrote a loop to parse the program file:

auto parseInstructions(File f) {

Instruction[char] instructions;

while(!f.eof) {

char state;

f.readf("In state %s:\n", &state);

f.readln; // "If the current value is 0:"

auto if0 = f.parseBranch;

f.readln; // "If the current value is 1:"

auto if1 = f.parseBranch;

f.readln; // Blank line

instructions[state] = Instruction(if0, if1);

}

return instructions;

}

A small comfort here is that checking for eof in the loop condition actually works. This is always subtly wrong in C++ and I can never remember why.

What’s left of the problem is absolutely routine D by now: associative arrays, UFCS, foreach loops, standard library utilities for summing and iterating and so forth. A few of my favourite things.

Concluding remarks

The best part is that my code was also fast! I was comparing my solutions above with those of someone else who was doing their Advent of Code in C. I could routinely match his execution speed on the problems where we bothered to compare, whenever we wrote similar algorithms. I’m eager to see what D can do when faced with some real number-crunching.

After all this, I have come to appreciate D more, as well as seeing some of its weak points. I think I have already raved enough about how much I like its functional style, its standard library, its type-checking, and its compile-time calculation. I also ran into a few bugs and deprecated features, and I observed some questionable language design choices. Not once did I notice having a garbage collector. It was lots of fun.

Merry belated Christmas!